Talking to the Moon: World’s First Multimodal Foundation Model for Lunar Exploration and Resources

The same AI methods that power ChatGPT can now allow you to talk to the Moon

“It’s good to be skeptical when applying foundation models to science. We’re now seeing good evidence that aggregating physical lunar data, and making it agentic, will unlock a new era of exploration.” -- James Parr, CEO, Trillium Technologies

TX, UNITED STATES, January 15, 2026 /EINPresswire.com/ -- The Frontier Development Lab (FDL.ai) LunarLab, a partnership between the Luxembourg Space Agency (LSA), the European Space Resources Innovation Centre (ESRIC), and Trillium Technologies, today announced Lunar-FM, the first AI foundation model dedicated to lunar exploration and resource prospecting.

Developed with compute and technical support from Google Cloud, NVIDIA, SCAN Computers International Ltd, and Datarock, Lunar-FM represents a novel application of self-supervised Artificial Intelligence designed to address the data fragmentation and heterogeneity inherent in current lunar remote sensing.

Addressing Data Heterogeneity in Lunar Science

Scientific data regarding the Moon is currently siloed across disparate archives and instrument types, ranging from optical imagery and thermal radiometry to gravity anomalies.

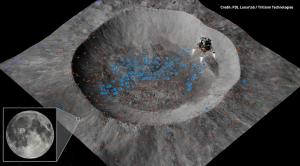

Lunar-FM integrates 18 distinct data layers from multiple orbital missions, including NASA’s Lunar Reconnaissance Orbiter (LRO), GRAIL, and Clementine, into a unified, multimodal architecture.

The model’s inputs include optical imagery, topography (LOLA), thermal emissions (Diviner), radar reflectivity (Mini-RF), and gravity anomalies (GRAIL). Using Transformers, the same AI method behind products such as ChatGPT, Lunar-FM creates a unified, 768-dimensional embedding that captures lunar properties up to 70 degrees north and south of the equator.

Key Technical Findings and Capabilities

Lunar-FM comes with several validated capabilities relevant to planetary science and in-situ resource utilization (ISRU):

- Few-Shot Learning of Lunar Resources: Validation tests demonstrated the model’s ability to generate high-fidelity global predictive maps of valuable resources from extremely scarce ground-truth data. In a case study of Titanium Dioxide (TiO2) abundance on the lunar surface, the model produced a global map from only eight expert-labeled samples from the Apollo landings.

- Data Compression for Use on PCs: Lunar-FM achieves a 300x compression of the input data, condensing multimodal instrument mosaics into a set of embeddings that allows complex scientific investigations to be run on standard computing infrastructure.

- Filling Sensor Gaps and Similarity Search: Lunar-FM reconstructs missing data by learning correlations across modalities (e.g., predicting thermal properties based on topography and optical data), addressing gaps in sensor coverage. Users can easily identify other features of interest using similarity search.

- Geologic Boundary Classification: Embeddings were validated against the USGS Unified Geologic Map of the Moon, demonstrating that the latent space encodes compositional and textural information aligning with human-defined geologic boundaries.
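As a rough illustration of how similarity search and few-shot estimation over embeddings can work in principle, here is a minimal Python sketch. It is not the Lunar-FM implementation: the function names, the tile ids, the 3-dimensional toy vectors (the real embeddings are 768-dimensional), and the label values are all hypothetical, and the similarity-weighted nearest-neighbour estimate is a stand-in technique chosen for brevity, since the release does not say which regression method was used.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embeddings (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query: Vector, tiles: Dict[str, Vector], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k tile ids whose embeddings are closest to the query, best first."""
    scored = [(tile_id, cosine_similarity(query, emb)) for tile_id, emb in tiles.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def few_shot_estimate(target: Vector, labeled: List[Tuple[Vector, float]], k: int = 3) -> float:
    """Similarity-weighted average of the labels of the k nearest labeled samples."""
    if not labeled:
        raise ValueError("at least one labeled sample is required")
    scored = sorted(
        ((cosine_similarity(target, emb), label) for emb, label in labeled),
        reverse=True,
    )[:k]
    weights = [max(sim, 0.0) for sim, _ in scored]  # ignore anti-correlated samples
    total = sum(weights)
    if total == 0.0:
        # No positively similar sample: fall back to a plain average.
        return sum(label for _, label in scored) / len(scored)
    return sum(w * label for w, (_, label) in zip(weights, scored)) / total


# Hypothetical toy data: tile ids, vectors, and label values are made up.
tiles = {
    "tile_a": [1.0, 0.0, 0.0],
    "tile_b": [0.9, 0.1, 0.0],
    "tile_c": [0.0, 1.0, 0.0],
    "tile_d": [0.0, 0.0, 1.0],
}
labeled_samples = [([1.0, 0.0, 0.0], 10.0), ([0.0, 1.0, 0.0], 2.0)]

nearest = most_similar([1.0, 0.0, 0.0], tiles, k=2)
estimate = few_shot_estimate([1.0, 1.0, 0.0], labeled_samples, k=2)
```

In this toy setup, `nearest` ranks `tile_a` ahead of `tile_b`, and `estimate` falls midway between the two labels because the target is equally similar to both labeled samples. A production workflow would more likely fit a regression model on the embeddings and use an approximate nearest-neighbour index for global searches.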

The Lunar Agentic Analyst - talking to the Moon

To facilitate operational science, Lunar-FM comes with a Lunar Agentic Analyst which provides a natural language interface to the lunar data. This system utilizes a Large Language Model (LLM) as an interpreter to bridge natural language queries with the Lunar-FM embeddings, allowing researchers, mission planners and rovers to ‘talk to the Moon’ for the first time.

This "agentic" interface allows researchers to perform complex data science tasks, such as similarity searches or resource regression, via conversational prompts (e.g., "Identify regions geologically similar to the Apollo 11 landing site"). The system routes these queries to the appropriate analytical tools within the foundation model, streamlining the workflow for geologists and mission planners.
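The routing step described above can be pictured with a small Python sketch. This is only an assumption about the general pattern, not the Lunar Agentic Analyst's actual design: the tool names, the `choose_tool` and `route` functions, and the keyword matching that stands in for the LLM interpreter are all hypothetical.

```python
from typing import Callable, Dict


def similarity_tool(query: str) -> str:
    """Hypothetical tool: would run a similarity search over the embeddings."""
    return f"[similarity search] {query}"


def regression_tool(query: str) -> str:
    """Hypothetical tool: would run a resource regression over the embeddings."""
    return f"[resource regression] {query}"


# Registry of analytical tools the interpreter can dispatch to.
TOOLS: Dict[str, Callable[[str], str]] = {
    "similarity": similarity_tool,
    "regression": regression_tool,
}


def choose_tool(query: str) -> str:
    """Stand-in for the LLM interpreter: pick a tool name from simple keywords."""
    text = query.lower()
    if "similar" in text:
        return "similarity"
    if any(word in text for word in ("abundance", "map", "estimate")):
        return "regression"
    return "unknown"


def route(query: str) -> str:
    """Send a natural-language query to the tool the interpreter selected."""
    tool = TOOLS.get(choose_tool(query))
    if tool is None:
        return "No matching tool; please rephrase the request."
    return tool(query)


answer = route("Identify regions geologically similar to the Apollo 11 landing site")
```

Here the example prompt from the text is dispatched to the similarity tool. In a real agentic system the keyword check would be replaced by an LLM call that returns a structured tool choice and its arguments.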

Commentary

Dr. Abigail Calzada Diaz, a lunar geologist at ESRIC, stated: "The primary issue is the fragmented, multi-source nature of lunar data which until now has made lunar investigations labor-intensive. Lunar-FM provides a standardized, unified data infrastructure enabling knowledge extraction and synthesis from disparate datasets."

She added that Lunar-FM is “a translational bridge between pure scientific understanding of lunar processes and the operational requirements of missions,” capable of converting theoretical knowledge into resource-focused targets.

Availability

FDL LunarLab is committed to open science principles. The Lunar-FM pre-trained embeddings, model weights, and the code for downstream tasks will be made publicly available to the research community after peer evaluation is complete.

The final public release will be at Luxembourg Space Resources Week on May 4-7, 2026.

- The Lunar-FM Technical Briefing is available from Lunarlab.ai

- Researchers interested in being part of the Science Evaluation can request early access on Lunarlab.ai

- Additional information can be found in the LunarLab 2025 results and findings document (which includes a technical memorandum)

Jodie Hughes

Trillium Technologies

+1 307-855-0473

jodie@trillium.tech

